The World Health Organization (WHO) has issued new guidance on the ethics and governance of large multi-modal models (LMMs). The guidance details over 40 recommendations for governments and companies involved in healthcare and technology, and covers both the design and deployment of LMMs.

So what are LMMs? LMMs, also known as “general-purpose foundation models”, are a type of generative AI. LMMs can accept one or more types of data input and generate diverse outputs that are not limited to the type of data fed into the algorithm. Good examples include ChatGPT, Bard and Bert.

We’ve set out our top five talking points from the guidance below.

- How could LMMs be used in healthcare? The guidance outlines five broad applications of LMMs for health:

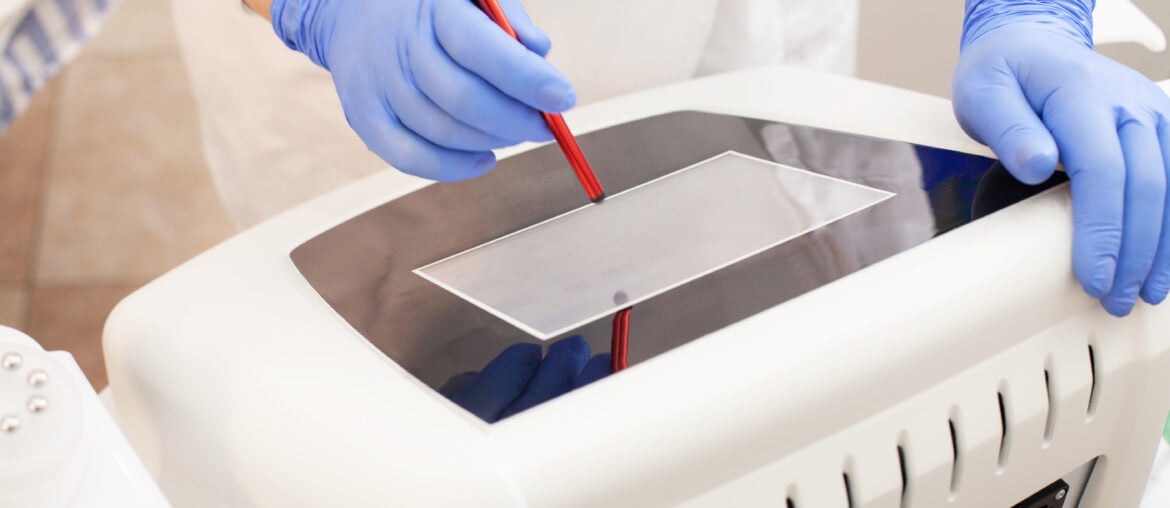

- diagnosis and clinical care, such as responding to written queries from patients, and assisting clinicians with diagnosis in fields such as radiology and medical imaging.

- patient-guided use, such as investigating symptoms and treatment via chatbots.

- clerical and administrative tasks, including filling in missing information in electronic health records, and drafting clinical notes after patient visits.

- medical and nursing education, including providing trainees with simulated patient encounters.

- scientific research and drug development, such as analysing electronic health records to identify clinical practice patterns.

Each of these use cases presents specific risks that require mitigation. For example, patient-guided applications present risks of false or biased statements, raise privacy concerns, and prompt the question of whether patient-facing technologies are subject to appropriate regulatory scrutiny.

- What are the ethical concerns and risks to health systems and society? Key concerns include:

- Accessibility and affordability: Factors undermining equitable access to LMMs include the digital divide (limiting use of digital tools to certain populations), fees or subscriptions required to access certain LMMs (limiting access in resource-poor environments), and the fact that most LMMs operate only in English, further limiting access.

- System-wide biases: In general, AI is biased towards the populations for which there are most data. This means that, in unequal societies, AI may disadvantage women, ethnic minorities and the elderly.

- Impact on labour and employment: The guidance points to estimates that LMMs will eventually result in the loss (or “degradation”) of at least 300 million jobs.

- Societal risks in concentrating power of industry: LMMs will reinforce the power and authority of a small group of technology companies that are at the forefront of commercialising LMMs. These companies may exploit regulatory gaps and a lack of transparency, and may not maintain corporate commitments to ethics.

- Environmental risks: LMMs have a negative impact on the environment and climate, given the carbon emissions and water consumption associated with training and deploying them.

- Recommendations for LMM developers: Recommendations include ensuring:

- the quality and type of data used to train LMMs meet core ethical principles and legal requirements, including by undertaking data protection impact assessments, ensuring data is unbiased and accurate, and avoiding use of data from third parties such as data brokers.

- ethical design and design for values. Design and development should not solely involve scientists and engineers, but also potential end-users and all direct and indirect stakeholders, such as healthcare professionals and patients.

- all possible steps are taken to reduce energy consumption, such as by improving a model’s energy efficiency.

- Recommendations for governments and healthcare providers: These include considering:

- the application and enforcement of standards, including data protection rules, that govern how data will be used to train LMMs. The guidance flags the requirement that data used to train LMMs are obtained and processed only where there is an appropriate legal basis to do so.

- the introduction of registration requirements, audits, and pre-certification programmes that impose legal obligations on developers, and establish incentives for them, to identify and avoid ethical risks.

- requiring notification and reminders to end-users that the content was generated by a machine and not a human being.

- Recommendations for deployers of LMMs: Deployers are responsible for avoiding or mitigating risks associated with use of an LMM:

- deployers should use information from developers or providers to decide not to use an LMM or application in an inappropriate setting, because of biases in the training data, contextual bias that renders the LMM inappropriate, or other avoidable errors or potential risks known to the deployer.

- deployers should clearly communicate any risks that they should reasonably know could result from use of an LMM and any errors or mistakes that have harmed users. Deployers may be responsible for suspending use or removing an LMM from the market to avoid harm.

- deployers can take steps to improve the affordability and accessibility of an LMM, such as ensuring that pricing corresponds to the capacity of a user to pay, and that the LMM is available in multiple languages.